Current Opinion on Animal Pose Estimation and Behavior Analysis Tools

Introduction

Animal pose estimation tools have become increasingly popular in recent years, as they provide a way to accurately track and measure the movement and behavior of animals. Researchers have identified a number of benefits to using these tools, including providing accurate and detailed data that can be used to study animal behavior and inform conservation efforts. However, there are still some challenges in using these tools, including accuracy and cost. In this document, we will explore current opinion on animal pose estimation tools, looking at both the advantages and disadvantages of using them.

Video-based markerless pose estimation is a powerful tool for quantifying animal behavior, as it allows unobtrusive, non-invasive observation of the animal's actions with minimal setup requirements. Pose estimation extracts keypoints across the animal's body, from which its motion patterns can be approximated. Different behaviors are associated with distinct motion patterns; for instance, a mouse's "investigative" behavior may involve a sequence of actions such as "stop walking, look around, and sniff". Once the animal's pose has been estimated in every video frame, the body points can be plotted as time series. Further analysis of these time series yields insight into the animal's behavior; for example, the duration or frequency of certain behaviors can be measured, which helps us understand how the animal behaves in its natural environment.
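As a minimal sketch of this kind of time-series analysis, the NumPy snippet below turns tracked keypoints into "stop" bouts and reports their frequency and duration. It assumes keypoints are stored as an array of shape (frames, keypoints, 2); the function names and the speed threshold are hypothetical and not taken from any particular tool.

```python
import numpy as np


def detect_bouts(mask, fps):
    """Return (start_frame, duration_s) for every run of True values in a per-frame mask."""
    padded = np.concatenate([[False], np.asarray(mask, dtype=bool), [False]])
    edges = np.flatnonzero(np.diff(padded.astype(int)))  # rising and falling edges alternate
    starts, ends = edges[0::2], edges[1::2]
    return [(int(s), float((e - s) / fps)) for s, e in zip(starts, ends)]


def stop_bouts(keypoints, fps=30.0, speed_thresh=2.0):
    """Find bouts where the body centroid is (nearly) stationary.

    keypoints: array of shape (T, K, 2) in pixels; NaNs mark missing detections.
    speed_thresh: hypothetical threshold in pixels per second.
    """
    centroid = np.nanmean(keypoints, axis=1)                          # (T, 2)
    speed = np.linalg.norm(np.diff(centroid, axis=0), axis=1) * fps   # (T-1,)
    speed = np.concatenate([[speed[0]], speed])                       # one value per frame
    return detect_bouts(speed < speed_thresh, fps)


# Toy track: the animal moves, stops for 3 frames, moves, then stops again.
x = np.array([0, 10, 20, 20, 20, 20, 30, 40, 40, 40], dtype=float)
track = np.stack([x, np.zeros_like(x)], axis=1)[:, None, :]  # shape (10, 1, 2)
bouts = stop_bouts(track, fps=10.0)
print("number of stops:", len(bouts))
print("total stop time (s):", sum(d for _, d in bouts))
```

In practice a single speed threshold is only a crude proxy for a behavior; the point is that once poses are available, bout counts and durations reduce to simple operations on arrays.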

Current popular animal pose estimation tools include DeepLabCut and OpenPose. DeepLabCut uses deep learning models to accurately track and measure an animal's pose, while OpenPose is a more general framework, originally developed for human pose estimation. Both tools provide detailed and accurate data that can be used to study animal behavior and inform conservation efforts. However, both are computationally expensive and require significant computing resources, which can be a barrier for smaller organizations. Additionally, accuracy can be an issue with both tools, as they sometimes have difficulty tracking certain animals or complex poses.

List of Current Technologies

Below, we summarize 📚 our findings in a table that evaluates each method on the basis of:

- Code availability (Code)

- Documentation (Doc)

- Scalability (Scalable)

- Last time updated (Updated)

- Dataset availability (Dataset)

- Open source

- Real-time performance (RT)

- User-friendliness (UF)

- GUI availability (GUI)

- Reproducibility (RP)

Review

| Tool | Code | Dataset | UF | Doc | Open source | GUI | Scalable | RT | RP | Updated |
|---|---|---|---|---|---|---|---|---|---|---|
| DLC | Available | N/A | 90 | 80 | Yes | Yes | Scalable | 50 | 90 | 2021 |
| SLEAP | Available | N/A | 85 | 65 | Yes | Yes | Scalable | 90 | 90 | 2021 |
| Anipose | Available | N/A | 80 | 60 | Yes | N/A | Highly | 10 | 60 | 2020 |
| DANNCE | Available | Available | 60 | 50 | Partially | N/A | Scalable | 10 | 50 | 2022 |
| AnyMaze | N/A | N/A | 100 | 100 | No | Yes | Highly | 100 | 100 | 2022 |
| DeepPoseKit | Available | N/A | 60 | 60 | Yes | Incomplete | Scalable | 90 | 80 | 2022 |
| DeepEthogram | Available | Available | 50 | 50 | Yes | Yes | Scalable | 90 | 80 | 2021 |
| MARS | Available | Available | 70 | 80 | Partially | Yes | Scalable | 80 | 80 | 2021 |
| VAME | Available | Available | 40 | 70 | Yes | N/A | Scalable | 20 | 90 | 2022 |
| B-SOiD | Available | Available | 80 | 80 | Yes | Yes | Highly | 90 | 85 | 2021 |
| BehaviorAtlas | Available | Available | 40 | 50 | Partially | N/A | Scalable | 50 | 30 | 2021 |
| Skeletal Estimation | Available | Available | 60 | 70 | Yes | N/A | Scalable | 20 | 85 | 2023 |
| AVATAR | N/A | N/A | 10 | 10 | No | N/A | Scalable | 95 | 10 | 2022 |

Overview

When evaluating the tools listed above, there are several important criteria to consider, such as user-friendliness, code availability, reproducibility, documentation, and real-time support.

For user-friendliness, the tools should be easy to use and understand, with minimal setup and configuration requirements. The code should be readily available and open source, giving users the flexibility to modify it to their needs. Additionally, the code should be well documented, with clear instructions on how to use the tool.

Reproducibility is also an important criterion, as it allows for results to be easily replicated and verified. Good documentation and code availability can help ensure reproducibility, while also making it easier for users to review and understand the results.

Additionally, real-time support is an important factor in evaluating animal pose estimation tools, as it can have a major impact on the usability and accuracy of the tool in applications that require real-time feedback.

Overall, each of these criteria should be taken into account when evaluating animal pose estimation tools. By considering these criteria, users can make an informed decision about which tool is best for their needs.
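One way to make this trade-off explicit is to weight the criteria by how much they matter for a given project and rank the tools accordingly. The sketch below uses the numeric ratings copied from the table above (UF, Doc, RT, RP); the weights are hypothetical and the ranking inherits the subjectivity of those ratings. Note that a high aggregate score does not mean two tools solve the same problem: AnyMaze, for example, does not perform pose estimation.

```python
# Numeric ratings copied from the table above, in the order (UF, Doc, RT, RP).
SCORES = {
    "DLC": (90, 80, 50, 90),
    "SLEAP": (85, 65, 90, 90),
    "Anipose": (80, 60, 10, 60),
    "DANNCE": (60, 50, 10, 50),
    "AnyMaze": (100, 100, 100, 100),
    "DeepPoseKit": (60, 60, 90, 80),
    "DeepEthogram": (50, 50, 90, 80),
    "MARS": (70, 80, 80, 80),
    "VAME": (40, 70, 20, 90),
    "B-SOiD": (80, 80, 90, 85),
    "BehaviorAtlas": (40, 50, 50, 30),
    "Skeletal Estimation": (60, 70, 20, 85),
    "AVATAR": (10, 10, 95, 10),
}


def weighted_score(values, weights):
    """Weighted mean of a tool's ratings; the weights need not sum to 1."""
    return sum(w * v for w, v in zip(weights, values)) / sum(weights)


def rank_tools(weights, scores=SCORES):
    """Return [(tool, score), ...] sorted from best to worst for the given weights."""
    ranked = [(name, weighted_score(vals, weights)) for name, vals in scores.items()]
    return sorted(ranked, key=lambda item: item[1], reverse=True)


# Hypothetical priorities: an offline analysis lab that does not need real-time speed.
offline_lab = (1.0, 1.0, 0.0, 1.0)
for name, score in rank_tools(offline_lab)[:3]:
    print(f"{name:<15} {score:5.1f}")
```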

DeepLabCut

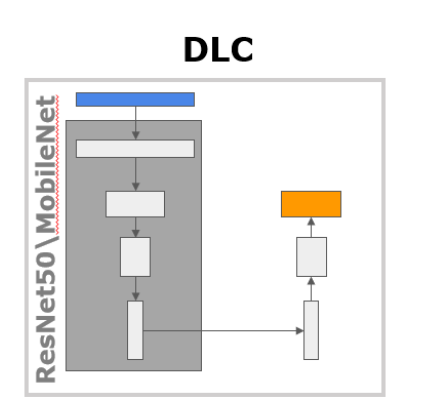

DeepLabCut is based on transfer learning and uses ResNets, similar to those employed in DeeperCut, for feature extraction. Compared to DeeperCut, DeepLabCut omits certain elements such as pairwise refinement and integer linear programming, resulting in faster inference. DeepLabCut has been tested on a range of animals whose morphology differs from that of humans. It is also an integral component of several of the pipelines listed above, which are described later on. The overall architecture of DeepLabCut is shown in the figure above.
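Heatmap-based detectors in the DeeperCut/DeepLabCut family predict, for every keypoint, a score map on a downsampled grid together with location-refinement offsets. The sketch below shows how such outputs can be decoded into image coordinates. The array layout, the stride of 8 and the function name are assumptions for illustration, not DeepLabCut's actual API.

```python
import numpy as np


def decode_keypoints(score_maps, offsets=None, stride=8):
    """Turn per-keypoint score maps into image coordinates.

    score_maps: array (H, W, K), one map per keypoint on a grid downsampled by `stride`.
    offsets: optional array (H, W, K, 2) of (dx, dy) refinements in pixels (hypothetical layout).
    Returns an array (K, 3) with x, y and the peak score (a confidence proxy).
    """
    h, w, k = score_maps.shape
    out = np.zeros((k, 3))
    for j in range(k):
        row, col = np.unravel_index(np.argmax(score_maps[:, :, j]), (h, w))
        # Centre of the winning grid cell, mapped back to input-image pixels.
        x = col * stride + 0.5 * stride
        y = row * stride + 0.5 * stride
        if offsets is not None:
            # Sub-cell correction predicted by the network for this keypoint.
            dx, dy = offsets[row, col, j]
            x, y = x + dx, y + dy
        out[j] = (x, y, score_maps[row, col, j])
    return out


# Toy output with two keypoints on a 4x5 grid.
maps = np.zeros((4, 5, 2))
maps[1, 2, 0] = 0.9
maps[3, 0, 1] = 0.7
print(decode_keypoints(maps, stride=8))
```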

Advantages:

- DeepLabCut (DLC) is a highly popular and widely used tool for markerless tracking in the scientific community. As such, it has comprehensive documentation and a large support community for seeking help and resolving any issues that may arise.

- The predictions are quite accurate.

- The GUI is user-friendly and includes a very intuitive tool for labeling keypoints and body parts.

- The built-in functionality for creating transcoded (labeled) videos is very helpful for visualizing the prediction results.

Limitations:

- DLC uses large models such as ResNet-50 and MobileNet, resulting in slow inference that is not appropriate for real-time applications. While DLC Live is available for real-time inference, many methods were built with offline DLC as their baseline before DLC Live was released and do not use it.

- The 3D reconstruction currently only supports two cameras, and there are some drawbacks to the 3D pose estimation. For example, it can be difficult to accurately determine the exact position and orientation of an object in 3D space. Additionally, the accuracy of the reconstruction can be affected by the quality of the camera images. Finally, the 3D reconstruction process can be computationally intensive.

- Training time can be lengthy. Although there are many pre-trained models available on the ModelZoo, it can be difficult to find one that meets one’s specific needs.

SLEAP

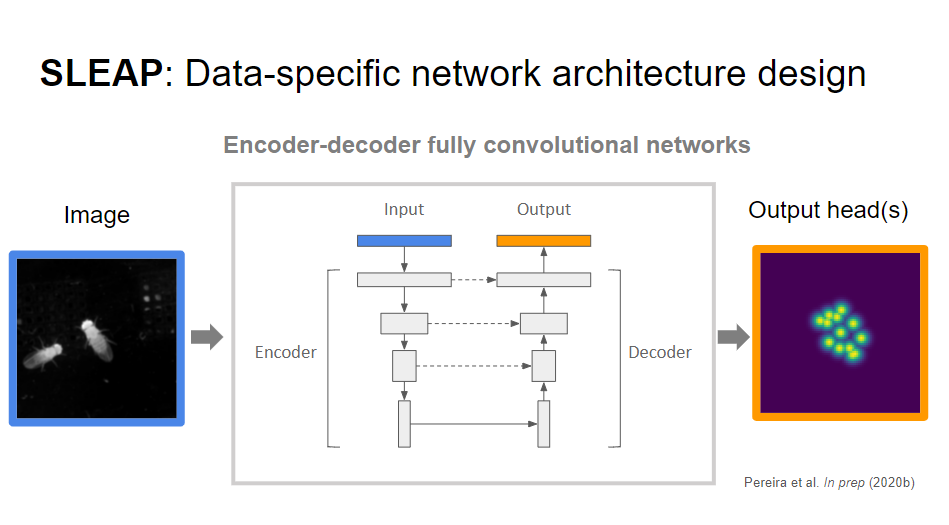

LEAP and SLEAP, developed by another group, are two alternatives to DeepLabCut that have been shown to perform well for animal tracking. LEAP is based on an iterative, human-in-the-loop training scheme. A subset of frames from a video is extracted and manually labeled using an interactive GUI. Using this small labeled set (~10 frames), initial pose estimates are predicted on a portion of the remaining frames. The original labels, together with the manually refined predictions, are then used to retrain the model, which in turn predicts new frames. This process is repeated until the desired pose estimation performance is achieved.
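The iterative loop described above can be sketched as follows. All callables (`annotate`, `train`, `predict`, `correct`) are hypothetical stand-ins for the GUI and the training code; the batch sizes are illustrative, and this is not LEAP's or SLEAP's implementation.

```python
from typing import Any, Callable, Dict, Sequence, Tuple


def human_in_the_loop(
    frames: Sequence[Any],
    annotate: Callable[[Any], Any],
    train: Callable[[Dict[Any, Any]], Any],
    predict: Callable[[Any, Any], Any],
    correct: Callable[[Any, Any], Any],
    n_initial: int = 10,
    n_per_round: int = 10,
    n_rounds: int = 3,
) -> Tuple[Any, Dict[Any, Any]]:
    """Iterative labeling: seed labels, predict, correct, retrain, repeat."""
    # 1. Label a small seed set by hand (~10 frames) and train a first model.
    labels = {f: annotate(f) for f in frames[:n_initial]}
    model = train(labels)
    cursor = n_initial
    for _ in range(n_rounds):
        batch = frames[cursor:cursor + n_per_round]
        if len(batch) == 0:
            break
        # 2. The model proposes poses; the user only fixes the mistakes.
        for f in batch:
            labels[f] = correct(f, predict(model, f))
        # 3. Retrain on the original labels plus the refined predictions.
        model = train(labels)
        cursor += n_per_round
    return model, labels


# Toy usage: "frames" are integers and the "model" just counts its training labels.
model, labels = human_in_the_loop(
    frames=list(range(40)),
    annotate=lambda f: f,
    train=lambda lab: len(lab),
    predict=lambda m, f: f,
    correct=lambda f, guess: guess,
)
print(model, len(labels))
```

The key design point is that, after the seed round, the user edits predictions instead of labeling from scratch, which is where most of the time savings come from.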

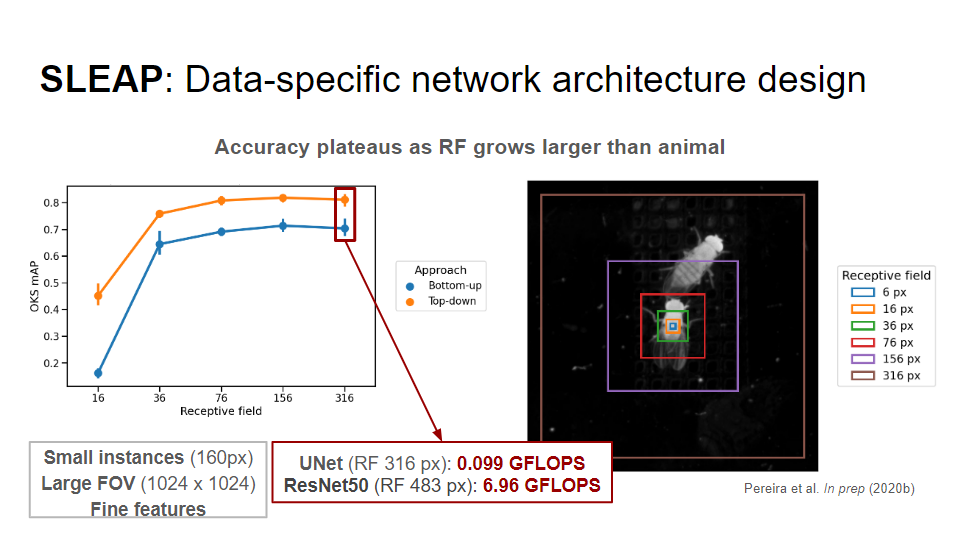

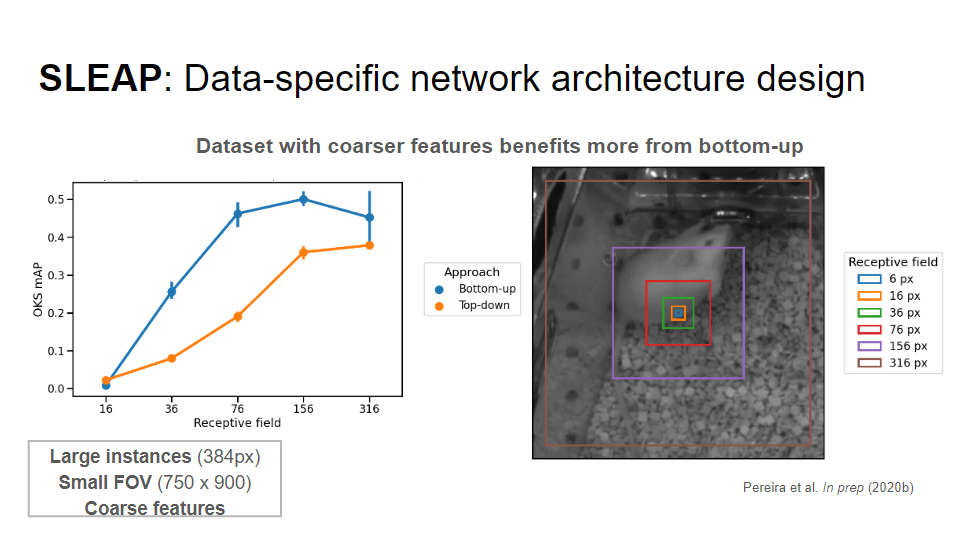

SLEAP is an extended version of LEAP for multi-animal tracking. The main thing that distinguishes SLEAP from DLC is its choice of architecture. Instead of relying on larger models like ResNet and MobileNet, SLEAP uses a set of small, specialized, UNet-based architectures that can be tailored to the data, for example to detect keypoints on subjects that appear smaller or larger in the field of view (FOV) and have fine or coarse features, such as rats/mice and flies, respectively.

The architecture used in SLEAP and its overall schema are shown in the figures above.

Advantages:

- Suitable for real-time applications.

- Smaller architecture footprint, which is faster to train.

- Accurate predictions.

- The GUI is user-friendly and includes a very intuitive tool for labeling keypoints and body parts.

- Visualization of predicted keypoints in a transcoded video.

- Good for multi-animal pose estimation with a bottom-up approach while preserving the animal's identities.

Limitations:

- Does not use transfer learning, so more training data is required for accurate predictions and acceptable real-time accuracy.

- Doesn’t support 3D reconstruction.

- Not as well documented and supported as DLC
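Preserving identities in multi-animal tracking requires matching the animals detected in one frame to those in the previous frame. The sketch below is a generic version of that step, not SLEAP's tracker: it assumes the same number of animals in both frames and minimizes the total centroid displacement. For the handful of animals typical of lab assays, an exhaustive search over permutations finds the same optimum that the Hungarian algorithm (for example `scipy.optimize.linear_sum_assignment`) would find more efficiently for larger groups.

```python
from itertools import permutations

import numpy as np


def assign_identities(prev_poses, curr_poses):
    """Match animals between consecutive frames by minimizing total centroid displacement.

    prev_poses, curr_poses: arrays of shape (N, K, 2) for the same N animals.
    Returns ids where ids[i] is the previous-frame identity given to current detection i.
    """
    prev_c = np.nanmean(prev_poses, axis=1)  # (N, 2) centroid of each animal
    curr_c = np.nanmean(curr_poses, axis=1)
    cost = np.linalg.norm(curr_c[:, None, :] - prev_c[None, :, :], axis=-1)  # (N, N)
    n = len(curr_c)
    best = min(permutations(range(n)), key=lambda p: sum(cost[i, p[i]] for i in range(n)))
    return list(best)


prev = np.array([[[0, 0], [1, 1]], [[10, 10], [11, 11]]], dtype=float)
curr = np.array([[[10.5, 9.5], [11, 11]], [[0.2, -0.1], [1, 1]]], dtype=float)
print(assign_identities(prev, curr))  # detection order swapped, identities recovered
```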

Having reviewed two of the most fundamental components of current pose estimation methods, we can now describe and analyze the rest of the solutions listed above. Most of them use DLC and/or SLEAP as their baseline.

Anipose

Anipose is a modular solution for animal pose estimation, consisting of a 3D calibration module, a set of filters to resolve 2D detection errors, a triangulation module to obtain accurate 3D trajectories, and a pipeline for efficient video processing.

Anipose extends DLC to support more than two cameras. Recording the subject from 3-6 cameras at different angles is recommended for an accurate 3D reconstruction from the 2D pose estimates. However, more cameras mean more frames to label and even longer training time.
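The core of lifting multi-camera 2D keypoints to 3D is triangulation. The sketch below shows generic linear (DLT) triangulation with NumPy; Anipose's own pipeline adds calibration, filtering and refinement steps that are not shown, and the camera matrices here are made-up values.

```python
import numpy as np


def triangulate_dlt(proj_mats, points_2d):
    """Linear (DLT) triangulation of one keypoint seen by several calibrated cameras.

    proj_mats: sequence of 3x4 projection matrices, one per camera.
    points_2d: sequence of (x, y) pixel coordinates of the same keypoint in each view.
    """
    rows = []
    for P, (x, y) in zip(proj_mats, points_2d):
        # Each view contributes two linear constraints on the homogeneous 3D point.
        rows.append(x * P[2] - P[0])
        rows.append(y * P[2] - P[1])
    _, _, vt = np.linalg.svd(np.asarray(rows))
    X = vt[-1]             # right singular vector with the smallest singular value
    return X[:3] / X[3]    # back from homogeneous coordinates


def project(P, X):
    """Project a 3D point with a 3x4 camera matrix."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]


# Three hypothetical cameras sharing intrinsics K, offset from each other by 1 unit.
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
cams = [K @ np.hstack([np.eye(3), t.reshape(3, 1)])
        for t in (np.zeros(3), np.array([-1.0, 0.0, 0.0]), np.array([0.0, -1.0, 0.0]))]
X_true = np.array([0.2, -0.1, 5.0])
X_hat = triangulate_dlt(cams, [project(P, X_true) for P in cams])
print(np.round(X_hat, 6))
```

With noise-free projections the point is recovered exactly; with real detections, more cameras add constraints that average out 2D errors, which is why 3-6 views are recommended.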

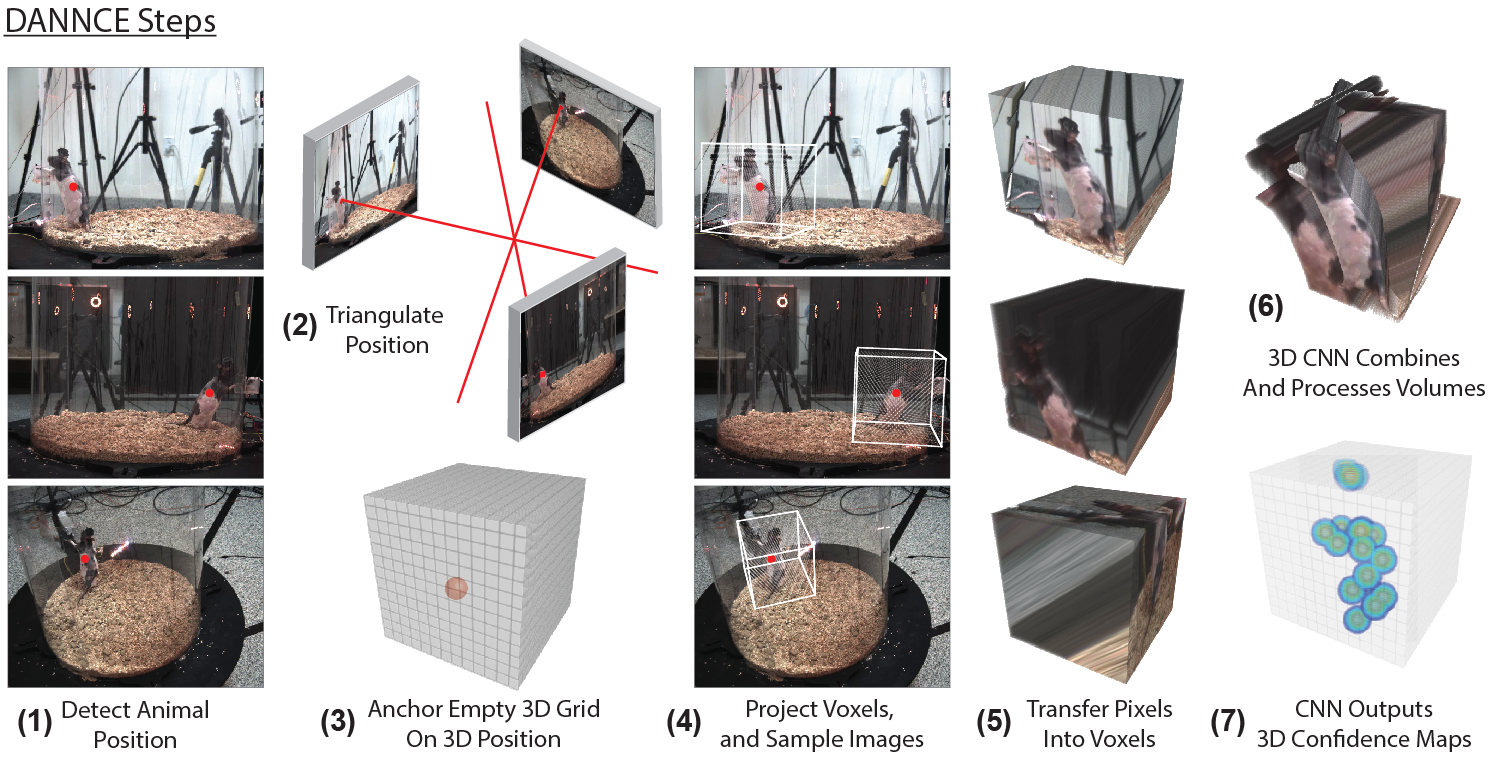

DANNCE

DANNCE is a 3D approach designed to track anatomical landmarks across species and behaviors. It uses projective geometry to construct volumetric inputs for a 3D convolutional neural network. It was trained and benchmarked on a dataset of nearly seven million frames and has been extended to datasets from rat pups, marmosets, and chickadees, allowing the quantitative profiling of behavioral lineage during development.

Unlike the other methods, which triangulate 2D keypoints detected in multiple views to construct 3D poses, DANNCE uses projective geometry to build a 3D input volume from the 2D camera images and trains a 3D CNN to predict 3D poses directly. This has been shown to greatly increase robustness.
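The projective-geometry step can be illustrated by "unprojecting" camera images into a voxel grid: each voxel center is projected into every camera and takes the pixel value it lands on. The sketch below is a simplified illustration under stated assumptions (single-channel images, nearest-neighbor sampling, a cube of fixed size, a hypothetical function name); it is not DANNCE's code.

```python
import numpy as np


def unproject_to_volume(images, proj_mats, center, side, n_vox):
    """Fill a cubic voxel grid with the pixel values each camera sees along its rays.

    images: list of single-channel (H, W) arrays, one per camera.
    proj_mats: list of matching 3x4 projection matrices.
    center, side: cube center (x, y, z) and edge length in world units.
    Returns an array (n_vox, n_vox, n_vox, n_cams); voxels outside a view stay 0.
    """
    coords = np.linspace(-side / 2, side / 2, n_vox)
    gx, gy, gz = np.meshgrid(coords, coords, coords, indexing="ij")
    pts = np.stack([gx, gy, gz], axis=-1).reshape(-1, 3) + np.asarray(center, dtype=float)
    pts_h = np.hstack([pts, np.ones((len(pts), 1))])  # homogeneous world points
    volume = np.zeros((len(pts), len(images)))
    for cam, (img, P) in enumerate(zip(images, proj_mats)):
        uvw = pts_h @ P.T
        depth = uvw[:, 2]
        safe = np.where(depth > 0, depth, 1.0)  # avoid dividing by zero behind the camera
        u = np.round(uvw[:, 0] / safe).astype(int)
        v = np.round(uvw[:, 1] / safe).astype(int)
        h, w = img.shape
        inside = (depth > 0) & (u >= 0) & (u < w) & (v >= 0) & (v < h)
        volume[inside, cam] = img[v[inside], u[inside]]  # nearest-neighbor sampling
    return volume.reshape(n_vox, n_vox, n_vox, len(images))


# One toy camera looking down +z; pixel value encodes its own (row, column).
K = np.array([[10.0, 0.0, 5.0], [0.0, 10.0, 5.0], [0.0, 0.0, 1.0]])
P = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
img = np.add.outer(100 * np.arange(11), np.arange(11)).astype(float)
vol = unproject_to_volume([img], [P], center=(0, 0, 5), side=1.0, n_vox=3)
print(vol.shape)
```

Because every camera is sampled into the same metric grid, a 3D network operating on this volume sees all views aligned in space, rather than combining separate 2D predictions afterwards.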

Note that DANNCE uses its own labeling tool, which can label frames taken from six camera views at the same time. This has several advantages:

- It significantly reduces labeling time.

- It enforces spatial consistency of each keypoint across all views: moving a keypoint in one view also moves it in the other views. As a result, the keypoints in all views represent a single point in 3D space, and the 3D reconstruction error is greatly reduced.

- It also enables users to label occluded keypoints/body parts accurately. This is not possible in the other pose estimation tools, where hidden parts must be labeled by guesswork or left out altogether.

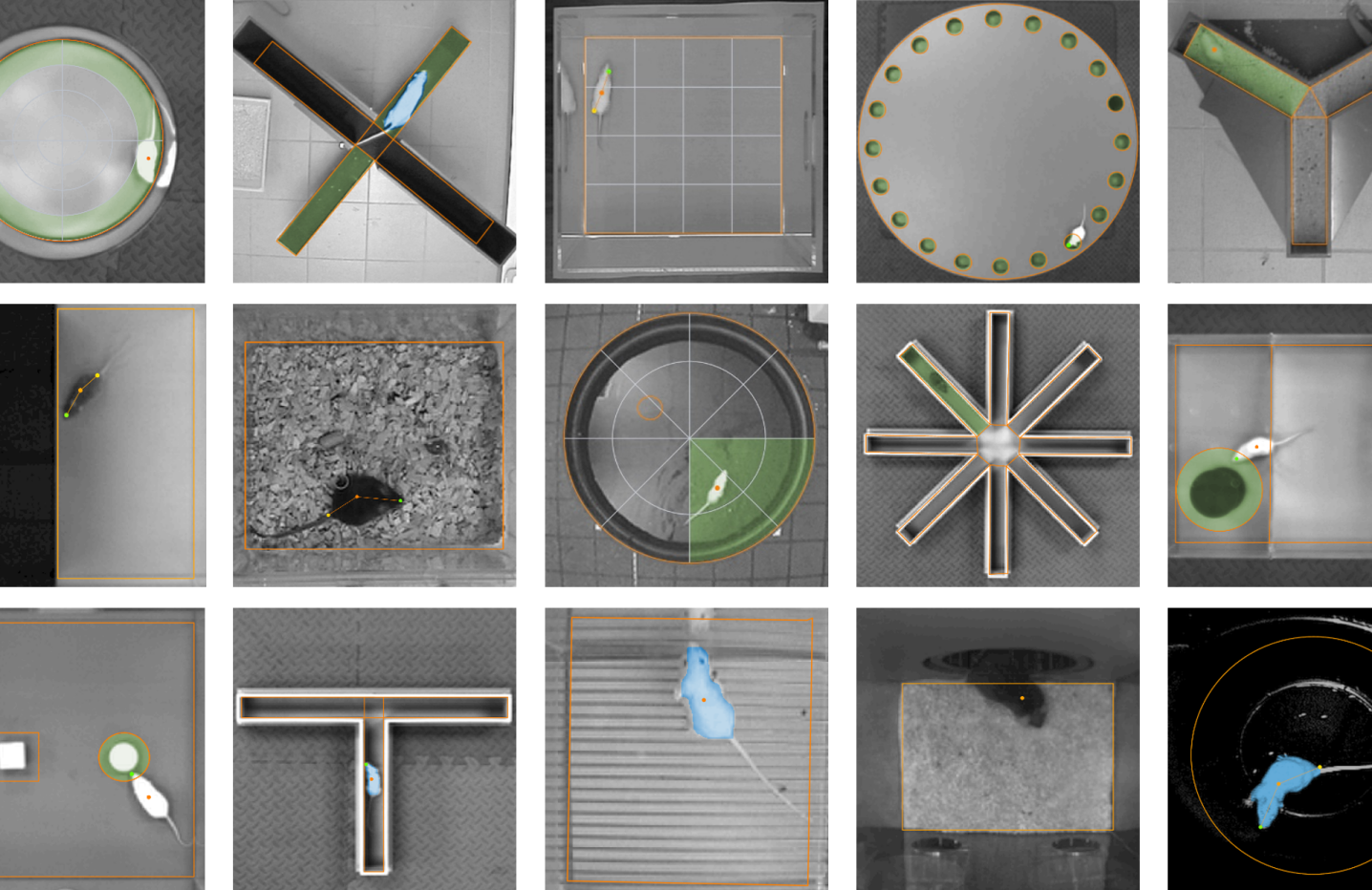

AnyMaze

AnyMaze is commercial software for tracking rats in different lab environments and mazes. It comes with a familiar interface resembling Microsoft Office. It is designed to control, record, and analyze animal behavior in laboratory experiments and can be used to conduct a variety of behavioral assays, including operant conditioning, classical conditioning, and passive avoidance tasks.

The software provides a user-friendly interface for controlling stimuli presentation and recording animal behavior, and it also includes analysis tools for quantifying behavioral data. It is widely used in neuroscience and psychology research to study a range of topics, including learning and memory, attention, and decision making.

AnyMaze is designed for a variety of laboratory animals, including rodents (such as mice and rats) and larger animals like non-human primates. The software is highly flexible and can be adapted to suit the needs of different experimental paradigms and species. The system can be used to control and record behavior in both simple and complex tasks.

Although it cannot perform pose estimation, it can be used for almost all of the standard tasks and tests performed on laboratory animals.

AnyMaze can automate tests and run them in batches on up to 40 different apparatus simultaneously, greatly increasing throughput.

AnyMaze is a no-code platform, so no programming experience is required.

DeepPoseKit

DeepPoseKit software is designed to be fast, flexible, and robust, with a high-level programming interface and graphical user-interface for annotations. The software is built using TensorFlow as a backend and is written in Python. The authors have also developed two new models for animal pose estimation, Stacked DenseNet and a modified version of Stacked Hourglass. These models are designed to be more efficient and accurate than previous models. It comes equipped with a newly developed method to process model outputs called Subpixel Maxima to allow for fast and accurate keypoint predictions with subpixel precision. The proposed models incorporate a hierarchical posture graph to learn the multi-scale geometry between keypoints. The software package, models, and method have been compared to existing models and shown to perform well in terms of speed, accuracy, training time, and generalization ability. The code, documentation, and examples are available on GitHub.
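To illustrate what subpixel precision means for heatmap outputs, the sketch below refines an integer heatmap peak by fitting a parabola through the log-heatmap values around it, which is exact for a Gaussian-shaped peak. This is a common, simpler technique shown as a stand-in; it is not DeepPoseKit's Subpixel Maxima method, and the function name is hypothetical.

```python
import numpy as np


def subpixel_peak(heatmap, eps=1e-12):
    """Return (x, y) of the heatmap maximum with subpixel precision.

    Fits a 1D parabola through the log values at the peak and its two neighbors,
    separately along x and y; peaks on the border fall back to integer precision.
    """
    h, w = heatmap.shape
    r, c = np.unravel_index(np.argmax(heatmap), heatmap.shape)
    logh = np.log(np.maximum(heatmap, eps))

    def vertex_offset(left, center, right):
        # Vertex of the parabola through (-1, left), (0, center), (1, right).
        denom = left - 2.0 * center + right
        return 0.0 if denom == 0 else 0.5 * (left - right) / denom

    dx = vertex_offset(logh[r, c - 1], logh[r, c], logh[r, c + 1]) if 0 < c < w - 1 else 0.0
    dy = vertex_offset(logh[r - 1, c], logh[r, c], logh[r + 1, c]) if 0 < r < h - 1 else 0.0
    return float(c + dx), float(r + dy)


# Gaussian peak centered between pixels at (x=3.3, y=2.6).
ys, xs = np.mgrid[0:7, 0:9]
hm = np.exp(-((xs - 3.3) ** 2 + (ys - 2.6) ** 2) / 2.0)
print(subpixel_peak(hm))
```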

- It offers a labeling UI, but for the remaining operations, such as training, selecting parameters and models, extracting frames, and visualizing results, one must use the code base provided in the Google Colab notebook.

- Installing a complex development environment can be a barrier to getting started with this tool. However, it's worth the effort, as it will enable you to take full advantage of the tool's features.

DeepEthogram

DeepEthogram is a tool that uses videos to predict the probability of certain behaviors. To use it, you must first train the flow generator on a set of videos without user input. Then, you must label each frame in a set of training videos for the presence of each behavior of interest. After that, the spatial and flow feature extractors will use the labels to create separate estimates of the probability of each behavior. The extracted feature vectors are then used to train the sequence models to make the final predictions. The models are trained in series by design, instead of all at once, to avoid overfitting and other issues.
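The final step, turning per-frame behavior probabilities into a binary ethogram, can be sketched as follows. Two assumptions are made for illustration only: the spatial and flow streams are fused by averaging their logits, and a moving average stands in for DeepEthogram's learned sequence model. The function names and the threshold are hypothetical.

```python
import numpy as np


def _logit(p, eps=1e-6):
    p = np.clip(p, eps, 1 - eps)
    return np.log(p / (1 - p))


def fuse_and_smooth(p_spatial, p_flow, window=5, threshold=0.5):
    """Combine two per-frame probability streams into a binary ethogram.

    p_spatial, p_flow: arrays of shape (T, B) with probabilities for B behaviors.
    window: odd length of the moving-average filter (stand-in for a sequence model).
    Returns an int array (T, B) with 1 where a behavior is predicted present.
    """
    # Average the two streams in logit space, then map back to probabilities.
    fused = 1.0 / (1.0 + np.exp(-0.5 * (_logit(p_spatial) + _logit(p_flow))))
    kernel = np.ones(window) / window
    smoothed = np.column_stack(
        [np.convolve(fused[:, b], kernel, mode="same") for b in range(fused.shape[1])]
    )
    return (smoothed >= threshold).astype(int)


# One behavior present in frames 3-7; a single-frame spike elsewhere is suppressed.
p = np.full((10, 1), 0.1)
p[3:8] = 0.9
print(fuse_and_smooth(p, p, window=3)[:, 0])
```

Temporal smoothing matters because behaviors last many frames; isolated single-frame detections are more likely to be noise than real bouts.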

DeepEthogram employs several deep neural networks to generate optic flow, compress the frames into features, and estimate the probability of each behavior. It comes in three versions (fast, medium, and slow) that use models of different sizes, trading inference speed against accuracy.


MARS

MARS is a system that uses deep learning to automatically detect and classify the behavior of mice in videos. It includes three tools: MARS itself, MARS_Developer, and BENTO.

- MARS itself is a pipeline for detecting and classifying the behavior of mice in videos, and comes with a pose estimator and behavior classifiers.

- MARS_Developer is a tool for re-training MARS on new data.

- BENTO is a tool for managing, visualizing, and analyzing data, including neural recordings, videos, behavior annotations, and audio.

It is thus an end-to-end computational pipeline for tracking, pose estimation, and behavior classification in interacting laboratory mice. MARS can detect attack, mounting, and close investigation behaviors in a standard resident-intruder assay.

MARS also includes a MATLAB-based GUI (BENTO) for synchronous display of neural recording data, multiple videos, human/automated behavior annotations, spectrograms of recorded audio, pose estimations, and other relevant information.

MARS can be used to monitor animal behavior over long periods of time. It has the ability to track and measure parameters such as speed, acceleration, and direction. Additionally, the software can detect subtle changes in behavior that may not be easily visible to the naked eye. The data collected can be used in a variety of ways, such as to explore the impact of environmental factors on animal behavior or to identify potential changes in behavior that may be indicative of disease.

MARS comes pre-packaged with a pose estimator trained on manual keypoint annotations of 15,000 video frames of interacting mice, and a set of behavior classifiers trained to detect aggression, mounting, and close investigation behaviors.
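Behavior classifiers of this kind typically operate on features derived from the poses of both animals. The sketch below computes three illustrative inter-animal distances for a resident-intruder pair and applies a toy distance rule. The keypoint indices, the threshold and the rule itself are hypothetical stand-ins for a trained classifier; they are not MARS's feature set.

```python
import numpy as np


def social_features(resident, intruder, nose=0, tail=1):
    """Per-frame distances between two mice.

    resident, intruder: arrays of shape (T, K, 2); nose/tail are hypothetical keypoint indices.
    Returns (T, 3): nose-nose, resident-nose-to-intruder-tail, centroid-centroid distance.
    """
    nose_nose = np.linalg.norm(resident[:, nose] - intruder[:, nose], axis=-1)
    nose_tail = np.linalg.norm(resident[:, nose] - intruder[:, tail], axis=-1)
    centroid = np.linalg.norm(resident.mean(axis=1) - intruder.mean(axis=1), axis=-1)
    return np.column_stack([nose_nose, nose_tail, centroid])


def close_investigation(features, max_dist=5.0):
    """Toy rule: flag frames where the resident's nose is near the intruder's nose or tail."""
    return np.minimum(features[:, 0], features[:, 1]) < max_dist


resident = np.array([[[0, 0], [3, 0]]], dtype=float)  # one frame, keypoints (nose, tail)
intruder = np.array([[[0, 4], [0, 8]]], dtype=float)
f = social_features(resident, intruder)
print(f, close_investigation(f))
```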

VAME

VAME is a PyTorch-based deep learning framework for clustering behavioral signals obtained from pose-estimation tools like DLC. It leverages recurrent neural networks (RNNs) to model sequential data: to learn the complex data distribution, VAME uses the RNN in a variational autoencoder (VAE) setting to extract the latent state of the animal at each step of the input time series.

It consists of three bidirectional recurrent neural networks to identify behavioral motifs, and uses a Hidden Markov Model (HMM) to segment the continuous latent space into discrete behavioral motifs.
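Segmenting a latent trajectory with an HMM amounts to finding the most likely sequence of hidden states, which the Viterbi algorithm computes. The sketch below assumes the HMM parameters are already known (isotropic Gaussian emissions, made-up transition matrix) and shows only the decoding step; it is not VAME's code, which also fits the HMM to the data.

```python
import numpy as np


def viterbi_gaussian(z, means, var, trans, start):
    """Most likely motif index per time step for latent vectors z.

    z: (T, D) latent vectors; means: (S, D) state means; var: isotropic variance;
    trans: (S, S) transition probabilities; start: (S,) initial probabilities.
    """
    T, S = len(z), len(means)
    # Gaussian log-likelihood up to a constant shared by all states.
    sq = ((z[:, None, :] - means[None, :, :]) ** 2).sum(-1)
    log_emit = -0.5 * sq / var
    log_trans = np.log(trans)
    score = np.log(start) + log_emit[0]
    back = np.zeros((T, S), dtype=int)
    for t in range(1, T):
        cand = score[:, None] + log_trans       # cand[i, j]: best path ending i -> j
        back[t] = np.argmax(cand, axis=0)
        score = cand[back[t], np.arange(S)] + log_emit[t]
    path = np.empty(T, dtype=int)
    path[-1] = int(np.argmax(score))
    for t in range(T - 1, 0, -1):               # follow back-pointers
        path[t - 1] = back[t, path[t]]
    return path


means = np.array([[0.0], [5.0]])
trans = np.array([[0.9, 0.1], [0.1, 0.9]])
start = np.array([0.5, 0.5])
z = np.array([[0.0], [0.2], [-0.1], [5.0], [4.8], [5.1], [0.1], [0.0]])
print(viterbi_gaussian(z, means, 1.0, trans, start))
```

The transition matrix is what makes this a segmentation rather than frame-by-frame clustering: an isolated ambiguous frame is absorbed into the surrounding motif instead of creating a one-frame switch.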

B-SOiD

Similar to VAME, B-SOiD is an open-source, unsupervised algorithm designed to identify behavior patterns without user bias. It takes pose estimates from DLC but uses a different set of unsupervised algorithms than VAME: it extracts features from the poses with its own algorithms and uses UMAP for nonlinear dimensionality reduction.
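Before dimensionality reduction, pose-based methods of this kind compute per-frame features such as distances, angles and speeds between body parts. The sketch below computes features of that kind from a pose array; it assumes body part 0 is the nose and the last one the tail base, and it is not B-SOiD's exact feature set.

```python
import numpy as np


def pose_features(pose, fps=30.0):
    """Per-frame features from a pose array of shape (T, K, 2).

    Returns (T-1, F): all pairwise body-part distances, change in body-axis angle
    (radians, wrapped to (-pi, pi]) and centroid speed (pixels per second).
    """
    T, K, _ = pose.shape
    i, j = np.triu_indices(K, k=1)
    dists = np.linalg.norm(pose[:, i] - pose[:, j], axis=-1)  # (T, K*(K-1)/2)
    axis = pose[:, 0] - pose[:, -1]  # assumed: part 0 = nose, last part = tail base
    heading = np.arctan2(axis[:, 1], axis[:, 0])
    dtheta = np.angle(np.exp(1j * np.diff(heading)))          # wrapped angle change
    centroid = pose.mean(axis=1)
    speed = np.linalg.norm(np.diff(centroid, axis=0), axis=-1) * fps
    return np.column_stack([dists[1:], dtheta, speed])


# Two frames: the animal translates by (3, 4) pixels without turning.
pose = np.array([[[0, 0], [1, 0], [2, 0]], [[3, 4], [4, 4], [5, 4]]], dtype=float)
print(pose_features(pose, fps=30.0))
```

A matrix of such features (one row per frame) is what a nonlinear embedding such as UMAP would then reduce to a few dimensions for clustering.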

In contrast to VAME, B-SOiD comes nicely packaged as a Python Streamlit app that is intuitive to run.

BehaviorAtlas

BehaviorAtlas is a spatio-temporal decomposition framework for detecting behavioral phenotypes from continuous, multidimensional 3D/2D motion-feature data. It decomposes movements (e.g., walking, running, rearing) in an unsupervised way and emphasizes their temporal dynamics. A self-similarity matrix of the movement segments describes their structure and enables dimensionality reduction and visualization to construct a feature space. This helps to study the evolution of movement sequences, higher-order behavior, and behavioral state transitions.
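Movement segments usually differ in length and speed, so comparing them needs a time-alignment-aware similarity. The sketch below builds a self-similarity matrix using plain dynamic time warping (DTW) as an assumed stand-in; BehaviorAtlas uses its own similarity measure, and the `scale` parameter is hypothetical.

```python
import numpy as np


def dtw_distance(a, b):
    """Classic dynamic time warping between sequences a (n, D) and b (m, D)."""
    n, m = len(a), len(b)
    cost = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)  # frame-to-frame costs
    acc = np.full((n + 1, m + 1), np.inf)
    acc[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            acc[i, j] = cost[i - 1, j - 1] + min(acc[i - 1, j], acc[i, j - 1], acc[i - 1, j - 1])
    return acc[n, m]


def self_similarity(segments, scale=1.0):
    """Symmetric (k, k) similarity matrix with exp(-DTW / scale) off the diagonal."""
    k = len(segments)
    sim = np.eye(k)
    for p in range(k):
        for q in range(p + 1, k):
            sim[p, q] = sim[q, p] = np.exp(-dtw_distance(segments[p], segments[q]) / scale)
    return sim


A = np.array([[0], [1], [2], [3]], dtype=float)
B = np.array([[0], [0], [1], [2], [3]], dtype=float)  # same movement, slightly slower
C = A[::-1]                                            # the movement reversed
print(np.round(self_similarity([A, B, C]), 3))
```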

It comes with 3D pose estimation code that uses DLC-tracked 2D poses from multiple cameras to construct 3D poses with a MATLAB toolbox.

BehaviorAtlas is specifically designed to analyze rodent behavior, which is highly variable and hard to measure. It uses a two-step process to decompose the continuous skeletal postural data into locomotor and non-locomotor movements. It then applies unsupervised clustering to uncover the structure of the behavior. The results are visualized using UMAP.

Skeletal Estimation

To address the discrepancy between limb kinematics detected by surface tracking with DLC and the underlying skeletal motion, this method uses an anatomical constraint model to restrict the pose-estimation markers to a skeleton, and uses inertial measurement unit (IMU) readings and magnetic resonance imaging (MRI) scans to verify the results.

It relies on DLC for 2D pose estimation; the 2D poses are lifted to 3D via triangulation and then combined with the anatomical constraint model to estimate the skeleton.

By fitting a skeleton-based model constrained by anatomical principles such as joint motion limits, bone rotations, and temporal constraints, it can better estimate the underlying skeleton of rats of different sizes.
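Two of the simplest anatomical constraints are fixed bone lengths and joint-angle limits. The sketch below snaps a triangulated limb chain to known bone lengths (keeping the measured bone directions) and computes a joint angle that could be checked against a limit range. It is far simpler than the optimization used by the method described above; the function names and the angle range are hypothetical.

```python
import numpy as np


def enforce_bone_lengths(chain, lengths):
    """Rescale each bone of a joint chain (root to tip) to a known length.

    chain: (J, 3) triangulated joint positions; lengths: (J-1,) known bone lengths.
    Bone directions are taken from the measured markers; only the lengths change.
    """
    out = chain.astype(float).copy()
    for b, length in enumerate(lengths):
        direction = chain[b + 1] - chain[b]
        direction = direction / np.linalg.norm(direction)  # assumes a non-zero measured bone
        out[b + 1] = out[b] + length * direction
    return out


def joint_angle(a, b, c):
    """Angle at joint b (degrees) between segments b->a and b->c."""
    u, v = a - b, c - b
    cos = np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v))
    return float(np.degrees(np.arccos(np.clip(cos, -1.0, 1.0))))


KNEE_LIMITS_DEG = (30.0, 170.0)  # hypothetical range of motion

chain = np.array([[0, 0, 0], [0, 0, 2], [0, 1, 2]], dtype=float)  # hip, knee, ankle markers
fixed = enforce_bone_lengths(chain, [1.0, 1.0])
angle = joint_angle(*fixed)
print(fixed, angle, KNEE_LIMITS_DEG[0] <= angle <= KNEE_LIMITS_DEG[1])
```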

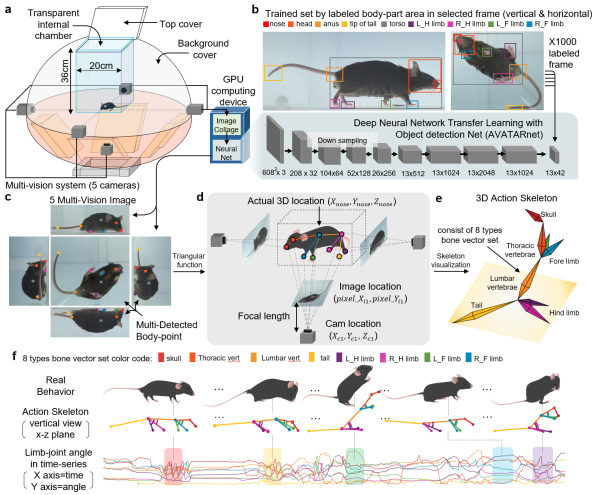

AVATAR

AVATAR, based on YOLOv4, is used to detect body parts on rats. These detected body parts are then used for 3D reconstruction with off-the-shelf computer vision algorithms. The results of the 3D reconstruction are visualized through an "action skeletal" of polygons, created by joining the detected 3D parts.

For behavior classification, it uses LSTM models.

Although straightforward, the setup was tested to drive photostimulation of the rat ventral tegmental area (VTA) within a latency of 90 ms.

Final Thoughts

There are a variety of methods available for animal pose estimation and behavior analysis, each with its own advantages and disadvantages. DeepEthogram uses convolutional neural networks to estimate the probability of user-defined behaviors directly from video, with versions that trade speed against accuracy. MARS is an end-to-end pipeline for tracking, pose estimation, and behavior classification of interacting mice. VAME and B-SOiD are unsupervised algorithms for clustering behavioral signals from pose-estimation tools like DLC. BehaviorAtlas separates skeletal kinematics into locomotor and non-locomotor movements for clustering rodent behavior. Skeletal Estimation uses an anatomical constraint model to restrict the pose-estimation markers to a skeleton. Finally, AVATAR is a YOLOv4-based system that detects body parts on rats and classifies behavior using LSTM models.

We have compiled an exhaustive list of high-impact papers on animal neuroethology for a comprehensive literature review.

No matter which method you choose to use, it is important to consider the pros and cons of each system carefully. Additionally, it is important to consider the resources that are required to use each system and the time it takes to train and run the model. Ultimately, the best system for your application will depend on the data that you have, the accuracy and speed that is required, and the resources that are available.

In the near future, animal pose estimation is likely to become more accurate and efficient due to continued research and development. This could revolutionize the way we study animals in their natural habitat and in the laboratory.